Mage.ai is a solid alternative to Apache Airflow. It is lighter and, from my perspective, much more user-friendly for building ETL data flows. It includes a wide range of predefined connectors to many sources and destinations (Python and SQL templates), so you don’t have to write code from scratch, which makes development time-efficient. However, Mage can do much more.

Mage.ai – Installation (Anaconda, Windows)

- Install Python – https://www.python.org/

- Install Anaconda – https://www.anaconda.com/download

- Open cmd as an administrator

- conda create --name mage-ai python=3.8

- conda activate mage-ai

- conda install pyodbc

- Install Mage in the new environment, e.g. pip install mage-ai

- Create a directory for your Mage project somewhere on your disk and switch to it in cmd (cd)

- Initialize or start Mage in cmd with mage start [project_name], where the last word is the name of your Mage project (if the project doesn’t exist, it is created; if it exists, it is started)

- The Mage instance runs at http://localhost:6789/
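To confirm from a script that the instance is up after mage start, a plain HTTP request to the default address is enough. The following is a minimal standard-library sketch; the function name is hypothetical, and it only checks that some server answers at the URL with a 2xx status, not that the server is actually Mage.

```python
import urllib.request


def is_mage_running(url: str = "http://localhost:6789/", timeout: float = 5.0) -> bool:
    """Return True if a server at url answers with a 2xx HTTP status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 300
    except OSError:
        # URLError (e.g. connection refused), HTTPError (4xx/5xx status)
        # and socket timeouts are all subclasses of OSError.
        return False
```

For example, is_mage_running() should return False until the server started by mage start is listening on port 6789.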

Mage.ai File Structure

- Mage has a logical directory structure (similar to dbt)

- All configurations for data sources/targets are in the io_config.yaml file, which also contains configuration templates for various databases
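Credentials don’t have to be stored in io_config.yaml in plain text: a profile value can reference an environment variable as {{ env_var('NAME') }}, and Mage fills it in when it loads the file. The hypothetical helper below only illustrates that substitution on a Postgres-style profile excerpt (key names follow Mage’s Postgres template, values are placeholders); it is a sketch, not Mage’s own config loader.

```python
import os
import re
from typing import Mapping, Optional

# Excerpt in the style of an io_config.yaml "default" profile.
IO_CONFIG_EXCERPT = """\
version: 0.1.1
default:
  POSTGRES_DBNAME: postgres
  POSTGRES_HOST: localhost
  POSTGRES_PORT: 5432
  POSTGRES_USER: "{{ env_var('POSTGRES_USER') }}"
  POSTGRES_PASSWORD: "{{ env_var('POSTGRES_PASSWORD') }}"
"""

# Matches {{ env_var('NAME') }} with single-quoted names and optional spaces.
ENV_VAR_PATTERN = re.compile(r"\{\{\s*env_var\(\s*'(\w+)'\s*\)\s*\}\}")


def resolve_env_vars(text: str, env: Optional[Mapping[str, str]] = None) -> str:
    """Hypothetical helper: replace env_var placeholders with values from env."""
    env = os.environ if env is None else env

    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in env:
            raise KeyError(f"environment variable {name!r} is not set")
        return env[name]

    return ENV_VAR_PATTERN.sub(substitute, text)


if __name__ == "__main__":
    print(resolve_env_vars(
        IO_CONFIG_EXCERPT,
        {"POSTGRES_USER": "etl_user", "POSTGRES_PASSWORD": "s3cret"},
    ))
```

Failing loudly on a missing variable is a deliberate choice here: an empty password silently passed to a database connection is harder to debug than a KeyError.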

Structure (most important components)

- Pipelines folder – contains data pipelines composed of blocks

- Block types (within a pipeline) – the same block can be reused in different pipelines, and many Python/SQL templates are available, so scripts don’t have to be written from scratch

  - data loaders – blocks that connect to a source system and extract data. They use one of the connections configured in io_config.yaml

  - transformers – take data from data loaders and transform it

  - data exporters – blocks that write data to the destination

  - dbt – a special block type for integrating dbt into Mage; at the time of writing, this works only when Mage runs in Docker

  - sensor – checks at regular intervals whether a condition is met and, once it is, lets the downstream blocks run – for example, it checks whether a file has appeared in a folder

- Charts – contain graphical representations of data, such as data quality checks, and more

- Dbt – the dbt folder is empty by default. You can initialize a dbt project in it by following the article ETL | Mage.ai – Dbt Installation (pip/conda) and project initialization
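To make the block types more concrete, here is a minimal, self-contained sketch of a pipeline written in the style of Mage blocks: a sensor waiting for a file, a data loader, a transformer that reads a pipeline variable from kwargs, and a data exporter. Assumptions: the decorators are identity stand-ins (in a Mage project, the templates import them from mage_ai.data_preparation.decorators), the blocks pass plain lists of dicts instead of the pandas DataFrames Mage templates often use, and run_pipeline, input_path, output_path and min_amount are hypothetical names. In Mage itself, the tool handles block ordering and sensor polling.

```python
import os
import tempfile
from typing import Any, Dict, List


# Stand-ins for Mage's block decorators so the sketch runs without Mage;
# they return the decorated function unchanged.
def sensor(function):
    return function


def data_loader(function):
    return function


def transformer(function):
    return function


def data_exporter(function):
    return function


@sensor
def check_condition(*args, **kwargs) -> bool:
    # Mage re-evaluates a sensor until it returns True; here the condition
    # is "the input file exists".
    return os.path.exists(kwargs["input_path"])


@data_loader
def load_data(*args, **kwargs) -> List[Dict[str, Any]]:
    # Hypothetical source: a text file with one "name,amount" record per line.
    with open(kwargs["input_path"], encoding="utf-8") as f:
        rows = [line.strip().split(",") for line in f if line.strip()]
    return [{"name": name, "amount": float(amount)} for name, amount in rows]


@transformer
def transform(data, *args, **kwargs) -> List[Dict[str, Any]]:
    # Pipeline variables reach blocks as keyword arguments.
    min_amount = kwargs.get("min_amount", 0)
    return [row for row in data if row["amount"] >= min_amount]


@data_exporter
def export_data(data, *args, **kwargs) -> None:
    # Write the transformed records to the (hypothetical) destination file.
    with open(kwargs["output_path"], "w", encoding="utf-8") as f:
        for row in data:
            f.write(f"{row['name']},{row['amount']}\n")


def run_pipeline(**variables) -> List[Dict[str, Any]]:
    """Hypothetical driver that runs the blocks once, in dependency order."""
    if not check_condition(**variables):
        # Mage would keep polling the sensor; this sketch just stops.
        raise RuntimeError("sensor condition not met")
    data = load_data(**variables)
    data = transform(data, **variables)
    export_data(data, **variables)
    return data


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        source = os.path.join(tmp, "input.csv")
        with open(source, "w", encoding="utf-8") as f:
            f.write("a,5\nb,20\n")
        result = run_pipeline(
            input_path=source,
            output_path=os.path.join(tmp, "output.csv"),
            min_amount=10,
        )
        print(result)  # [{'name': 'b', 'amount': 20.0}]
```

Because each block is an ordinary function taking upstream data plus **kwargs, the same block can be reused in several pipelines, which is the property the block list describes.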

Mage.ai Functionalities and Use Cases

- ETL processes (data pumps) – many predefined connectors, detailed logging and scheduling

- Predefined blocks for various systems

- Predefined charts for various scenarios – testing, data quality

- Detailed logging

- File manager

- Integration with dbt (running pipelines and triggering dbt runs depending on their completion)

- ETL creation using AI (via a connection to the ChatGPT API)

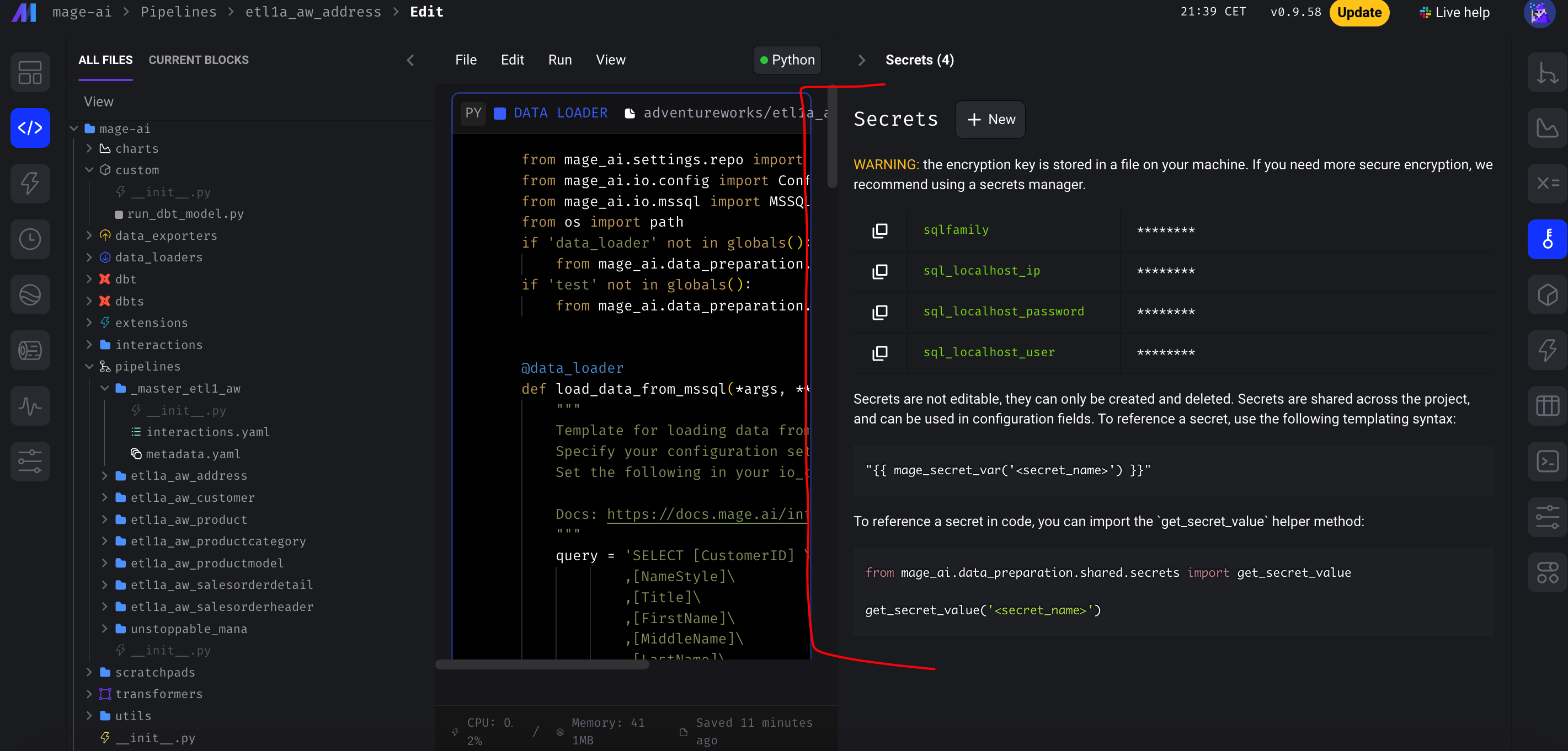

- Secrets management

- Authentication options (off by default, which suits local use after installation, but it can be enabled when Mage runs on a server)

- Automatic integration with Git – clone/commit/pull/push/tracking changes

- Integrated terminal

- Predefined charts and summaries for datasets – testing and data exploration

- Ability to run pipelines through an API

- Variables, local and global

- Active and growing community

- Good documentation
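For running pipelines through an API, Mage lets you create an API trigger for a pipeline; the trigger gives you a URL that accepts a POST request, and runtime variables can be sent in the JSON body. The sketch below assumes the URL format .../api/pipeline_schedules/[schedule_id]/pipeline_runs/[token] and the {"pipeline_run": {"variables": ...}} body as described in Mage’s documentation; copy the exact URL from the trigger’s page in your own instance. The function names, schedule ID and token used here are hypothetical.

```python
import json
import urllib.request
from typing import Any, Dict, Optional


def build_trigger_request(
    base_url: str,
    schedule_id: int,
    token: str,
    variables: Optional[Dict[str, Any]] = None,
) -> urllib.request.Request:
    """Build a POST request for a Mage API trigger (assumed URL/body format)."""
    url = f"{base_url.rstrip('/')}/api/pipeline_schedules/{schedule_id}/pipeline_runs/{token}"
    payload = {"pipeline_run": {"variables": variables or {}}}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def trigger_pipeline(request: urllib.request.Request, timeout: float = 30.0) -> Dict[str, Any]:
    """Send the request and return the decoded JSON response."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

For example, trigger_pipeline(build_trigger_request("http://localhost:6789", 1, "your_trigger_token", {"env": "dev"})) would start a run in which env is available to the blocks as a keyword argument. Building the request separately from sending it keeps the URL and payload easy to inspect before anything hits the server.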

Troubleshooting and Error Fixes

So far, while exploring the tool, I’ve encountered the following issues:

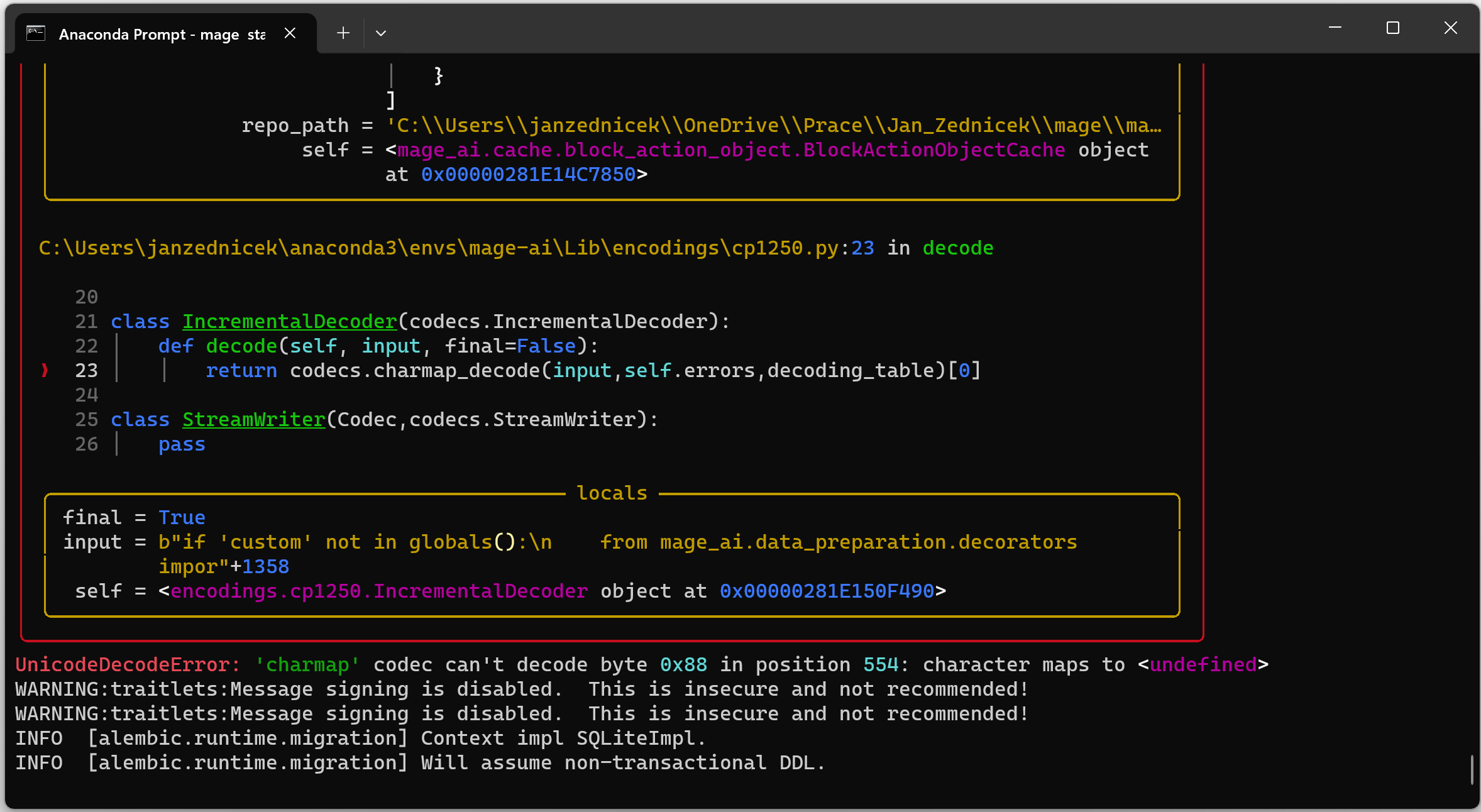

![[Errno 2] No such file or directory](https://janzednicek.cz/wp-content/uploads/2024/01/Screenshot-2024-01-25-at-23.41.32.png)