Snowflake is a cloud platform for data storage and analysis that offers a wide range of services for working with data: data warehousing, data lakes, data science, and data sharing. It was founded in 2012 and has grown rapidly since then. The main reasons are its ability to process large volumes of data efficiently, its unique architecture, and its scalability. This makes it suitable for both small organizations and enterprise clients. The service is billed on a pay-as-you-go basis, which means that with Snowflake you pay for what you actually use. Because of its architecture, cheap storage and more expensive compute (which can be further divided, see below) are billed separately. Understanding the structure of Snowflake is therefore important for understanding its pricing correctly. You can try Snowflake for free with a 30-day trial that includes up to 400 USD of usage, which corresponds to the Standard edition.

Snowflake Price and Credits – Simplified

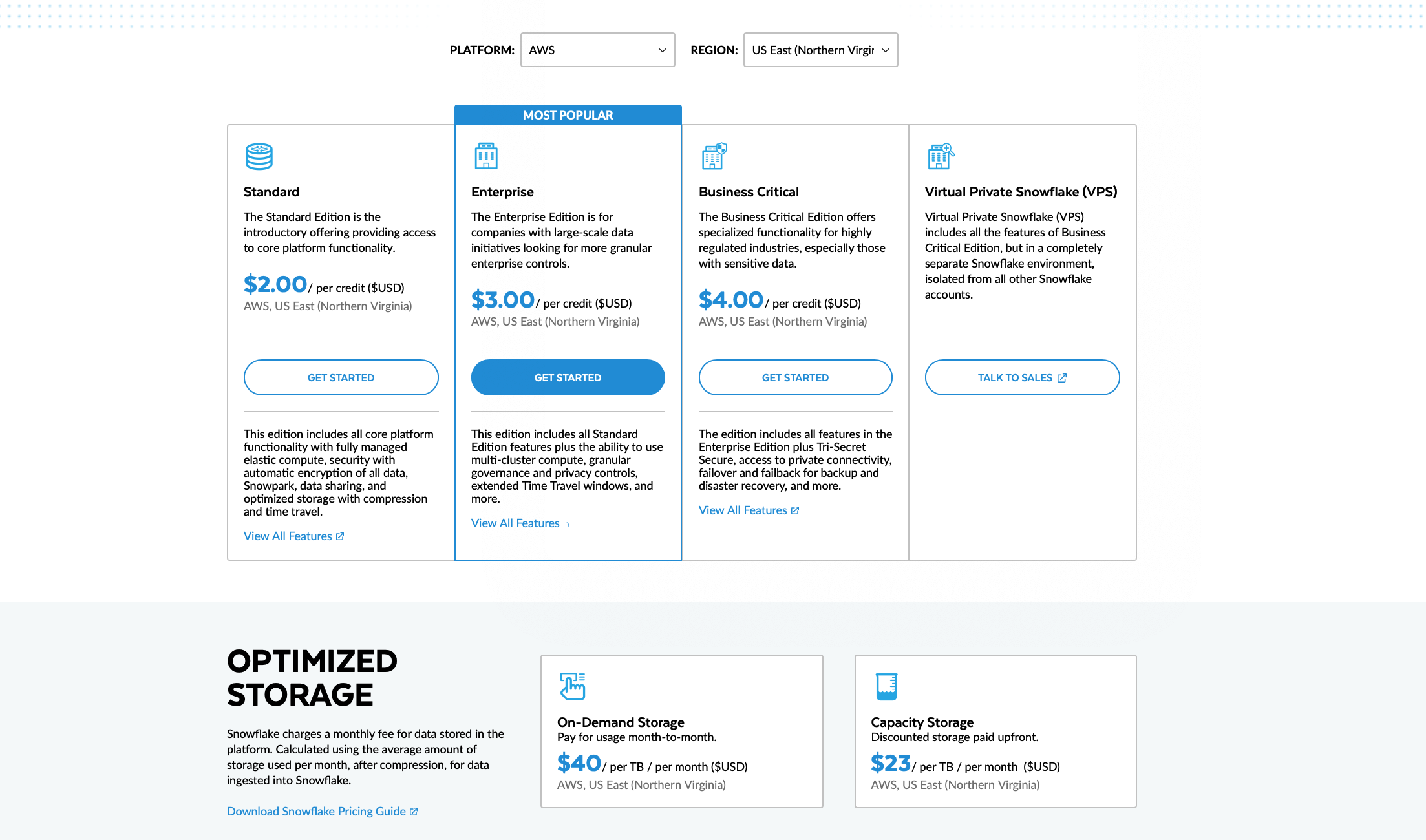

As mentioned above, understanding the pricing principles requires a thorough understanding of the architecture, in which storage and compute are separated. Snowflake pricing is a complex matter, but in principle it is based on 4 main variables: storage, compute, edition, and the platform/region of your instance. You can find the pricing page under the link. Everything revolves around the price of 1 credit, which differs according to the variables mentioned.

Snowflake credit – In Snowflake, 1 credit corresponds to the consumption of a certain amount of computing power (CPU, memory) for one hour. How quickly credits are consumed depends on the size and type of virtual warehouse used (more on this in the architecture section below). The price of 1 credit also varies with the Snowflake edition (Standard, Enterprise, Business Critical) and with the platform or region; see the picture.

Storage Price – databases

Storage pricing is quite simple: you pay for the data you store, and it is the cheapest part of the bill. There are 2 options, depending on whether the client's needs vary or are fixed. How storage works is described in the chapter on Snowflake architecture. Note: pricing varies by region, but not significantly.

- Pay as you go (on demand) – 40 USD per TB per month

- Pre-order (capacity) – 23 USD per TB per month (a little over half the on-demand price)
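The difference between the two options can be computed directly. The following is a minimal Python sketch. It assumes only the two example prices above and ignores the fact that a pre-ordered (capacity) contract is a prepaid commitment. `monthly_storage_cost` is a hypothetical helper.

```python
# Example storage prices from above, in USD per TB per month.
# Real prices vary by region; this is an illustrative sketch only.
ON_DEMAND_USD_PER_TB = 40.0
CAPACITY_USD_PER_TB = 23.0


def monthly_storage_cost(tb: float, pre_ordered: bool) -> float:
    """Return the monthly storage cost in USD for `tb` terabytes."""
    price = CAPACITY_USD_PER_TB if pre_ordered else ON_DEMAND_USD_PER_TB
    return tb * price


if __name__ == "__main__":
    for tb in (1, 2, 3):
        on_demand = monthly_storage_cost(tb, pre_ordered=False)
        capacity = monthly_storage_cost(tb, pre_ordered=True)
        print(f"{tb} TB: {on_demand:.0f} USD on demand vs {capacity:.0f} USD pre-ordered")
```

For the 3 TB used in the example below, pre-ordering comes to 69 USD per month, compared with 120 USD on demand.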

Price by Edition

The price of 1 credit depends on which edition you use. You can find the complete list of features each edition provides under the link – Snowflake Editions. The basic edition is Standard, which lacks some advanced features. It is therefore worth going through the differences between editions before choosing one.

- Standard – 2 USD per credit

- Enterprise – 3 USD per credit

- Business Critical – 4 USD per credit

- Virtual Private Snowflake (VPS) – custom pricing
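Every compute cost is ultimately expressed in credits, so converting credits to dollars is a simple lookup. The sketch below assumes the example prices per credit listed above; real prices depend on platform and region. It leaves out Virtual Private Snowflake, which is priced individually. `credits_to_usd` is a hypothetical helper and not a Snowflake API.

```python
# Example on-demand prices per credit from the list above (USD).
# Virtual Private Snowflake has custom pricing, so it is deliberately omitted.
PRICE_PER_CREDIT_USD = {
    "standard": 2.0,
    "enterprise": 3.0,
    "business_critical": 4.0,
}


def credits_to_usd(credits: float, edition: str) -> float:
    """Convert consumed credits to USD for the given edition."""
    try:
        return credits * PRICE_PER_CREDIT_USD[edition]
    except KeyError:
        raise ValueError(f"unknown or custom-priced edition: {edition!r}") from None


if __name__ == "__main__":
    # The same 100 credits cost a different amount in each edition.
    for edition in PRICE_PER_CREDIT_USD:
        print(f"{edition}: {credits_to_usd(100, edition):.0f} USD")
```

The same workload consuming 100 credits therefore costs 200, 300 or 400 USD, depending only on the edition.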

Price for Computing Power and Credits – based on warehouse size

The price also depends on the size of your virtual warehouses, i.e., the compute units you use to query data. You can size them according to your needs. The larger the warehouse, the more computing power it provides and the more credits it consumes per hour. Complete information on credit consumption by virtual warehouse size is available here.

Price – number of credits consumed per hour of running time

- X-Small – 1 credit/h (Default setting when creating a virtual warehouse)

- Small – 2 credits/h

- Medium – 4 credits/h

- Large – 8 credits/h

- X-Large – 16 credits/h

- 2X-Large – 32 credits/h

- 3X-Large – 64 credits/h

- 4X-Large – 128 credits/h

- 5X-Large – 256 credits/h

- 6X-Large – 512 credits/h
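Each size step doubles the hourly rate, so the table above can be generated instead of typed out. The sketch below also converts a single warehouse run into credits. It assumes per-second billing with a 60-second minimum each time a warehouse starts, which is how Snowflake documents warehouse billing. It ignores other details such as resizing. `credits_for_run` is a hypothetical helper.

```python
# Warehouse sizes in ascending order; each step doubles the credits per hour.
SIZES = ["X-Small", "Small", "Medium", "Large", "X-Large",
         "2X-Large", "3X-Large", "4X-Large", "5X-Large", "6X-Large"]
CREDITS_PER_HOUR = {size: 2 ** i for i, size in enumerate(SIZES)}

# Assumed minimum charge (in seconds) each time a warehouse starts.
MIN_BILLED_SECONDS = 60


def credits_for_run(size: str, seconds: float) -> float:
    """Credits consumed by one continuous run of a warehouse of the given size."""
    billed = max(seconds, MIN_BILLED_SECONDS)
    return CREDITS_PER_HOUR[size] * billed / 3600


if __name__ == "__main__":
    print(CREDITS_PER_HOUR["6X-Large"])        # 512
    print(credits_for_run("Medium", 30 * 60))  # 30 minutes on Medium: 2.0 credits
    print(credits_for_run("X-Small", 10))      # a 10 s run is billed as 60 s
```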

Platform (AWS, Azure, Google) and Region

All compute and storage prices vary somewhat depending on the chosen platform and region.

Example of Pricing for a Small Data Warehouse and the Importance of Testing

Let’s work through a small example: a small data warehouse with the following parameters:

- Size about 2 TB, with a stable daily increment of about 0.5 GB

- The warehouse is loaded once a day at midnight. The run takes 3 hours: 1 hour of data extraction using Fivetran and 2 hours of transformations in the target storage into a semantic model.

- Power BI reports are then refreshed from the data warehouse, which takes 1 hour

- During the day, analysts and developers query the data warehouse. Combined, this takes about 10 hours per working day on average.

- Feature-wise, the Standard edition is enough for us

Snowflake initial test price

- Storage – 3 TB pre-ordered (3 × 23 USD, rounded) – 70 USD/month

- Compute – 696 USD/month in total

- Price of 1 credit = 2 USD, because we use the Standard edition

- 1 hour of compute = 1 credit, because we start with the smallest warehouse size, X-Small

- Warehouse load = 2 h/day (the Fivetran extraction does not consume Snowflake compute) × 30 days = 60 h/month × 1 credit × 2 USD = 120 USD

- Report refresh = 1 h/day × 30 days = 30 h/month × 1 credit × 2 USD = 60 USD

- User queries = 10 h/day × 20 working days = 200 h/month × 1 credit × 2 USD = 400 USD

- Reserve 20% = (120 + 60 + 400) × 0.2 = 116 USD
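The same estimate can be reproduced in a few lines. This makes it easy to rerun with other inputs, such as a different edition, warehouse size, or number of hours. The sketch encodes exactly the assumptions listed above, and the variable and workload names are illustrative.

```python
PRICE_PER_CREDIT = 2.0   # Standard edition
CREDITS_PER_HOUR = 1     # X-Small warehouse
STORAGE_USD = 70         # 3 TB pre-ordered (3 x 23 = 69 USD, rounded up)
RESERVE_PERCENT = 20

# (compute hours per day, days per month) for each workload in the example
WORKLOADS = {
    "transformations": (2, 30),   # the Fivetran extraction hour is not counted
    "power_bi_refresh": (1, 30),
    "user_queries": (10, 20),     # working days only
}


def estimate() -> dict:
    """Monthly cost breakdown in USD for the example data warehouse."""
    costs = {
        name: hours * days * CREDITS_PER_HOUR * PRICE_PER_CREDIT
        for name, (hours, days) in WORKLOADS.items()
    }
    subtotal = sum(costs.values())              # 120 + 60 + 400 = 580
    reserve = subtotal * RESERVE_PERCENT / 100  # 116
    compute = subtotal + reserve                # 696
    return {**costs, "reserve": reserve, "compute": compute,
            "storage": STORAGE_USD, "total": compute + STORAGE_USD}


if __name__ == "__main__":
    for item, usd in estimate().items():
        print(f"{item:>18}: {usd:8.2f} USD")
```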

We estimate that, at the start, operation will cost about 766 USD per month (696 USD for compute + 70 USD for storage) with the smallest virtual warehouse size. The solution is scalable because we can change the warehouse size at any time and thereby increase the computing power of the entire solution. If this configuration meets our needs, the price is reasonable, given that we do not have to own any infrastructure.

If we upgrade the compute unit from X-Small to Small, which consumes 2 credits per hour, the compute part will NOT necessarily cost twice as much. The stronger compute unit shortens the processing time, and if a job finishes in half the time, its cost stays the same. It is therefore worth experimenting a bit to find the optimal settings.
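The trade-off can be made concrete with the 2-hour transformation from the example. The speedup factors below are hypothetical. The real speedup has to be measured, because not every workload runs twice as fast on a warehouse twice as large.

```python
PRICE_PER_CREDIT = 2.0  # Standard edition, as in the example


def run_cost(credits_per_hour: int, runtime_hours: float) -> float:
    """USD cost of one run at the given warehouse rate."""
    return credits_per_hour * runtime_hours * PRICE_PER_CREDIT


def small_cost(xsmall_runtime_hours: float, speedup: float) -> float:
    """Cost on Small (2 credits/h) if the job runs `speedup` times faster than on X-Small.

    `speedup` is a hypothetical, workload-specific factor.
    """
    return run_cost(2, xsmall_runtime_hours / speedup)


if __name__ == "__main__":
    baseline = run_cost(1, 2.0)  # X-Small for 2 hours
    for speedup in (2.0, 1.5, 1.0):
        print(f"speedup {speedup}: Small costs {small_cost(2.0, speedup):.2f} USD "
              f"vs {baseline:.2f} USD on X-Small")
```

With a perfect 2× speedup, the cost is unchanged and the job finishes an hour earlier. With no speedup at all, the cost doubles.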

Architecture of a Snowflake Instance – Storage, Compute, Cloud Services

With Snowflake, you are buying a data platform as a service. You don't have to deal with any installation or think about server settings, networking, etc. You just register, and Snowflake takes care of everything.

Your Snowflake instance always runs in the background on one of the major cloud infrastructure providers. The biggest advantage is that you don't have to manage anything, and you gain several potential benefits (replication, sharing across providers, etc.). When creating an instance, you choose the provider and the location (ideally the region closest to you):

- Google Cloud (the smallest share of clients, about 5%)

- AWS

- Microsoft Azure

In Snowflake, all data is stored centrally, while computing power is separated from data storage and allocated as needed. The diagram below shows the architecture, which consists of several independent layers. This separation makes the entire corporate solution highly scalable [1].

Database Storage – Data Layer

At the core of Snowflake is centralized database storage, which holds all data in a format optimized for the cloud. This storage is independent of the compute instances and can hold both structured and unstructured data.

Security: Data is encrypted at rest using AES-256, and Snowflake rotates the encryption keys at regular intervals without any user intervention. In transit, data is encrypted using the TLS (Transport Layer Security) protocol. There are also options for using your own keys.

When we load data into Snowflake, it is compressed, physically reorganized, enriched with metadata, and so on. All of this is managed by Snowflake. The data is then available to the query processing (compute) layer. Storage is billed monthly on a pay-as-you-go basis, according to the average amount of data stored.
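Billing by average size means that data which grows during the month is not charged at its end-of-month size. Below is a minimal sketch, assuming one storage snapshot per day, the on-demand price of 40 USD per TB from the pricing section, and decimal units (1 TB = 1000 GB). The snapshot values stand for the stored (compressed) size, and `monthly_storage_bill` is a hypothetical helper rather than Snowflake's exact metering.

```python
# Assumed on-demand price from the storage pricing section (USD per TB per month).
USD_PER_TB_MONTH = 40.0


def monthly_storage_bill(daily_tb: list[float], usd_per_tb: float = USD_PER_TB_MONTH) -> float:
    """Bill for one month: the average of the daily stored amounts times the price."""
    if not daily_tb:
        return 0.0
    average_tb = sum(daily_tb) / len(daily_tb)
    return average_tb * usd_per_tb


if __name__ == "__main__":
    # Hypothetical month: starts at 2 TB and grows by 0.5 GB (0.0005 TB) per day.
    snapshots = [2.0 + 0.0005 * day for day in range(30)]
    print(f"{monthly_storage_bill(snapshots):.2f} USD")
```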

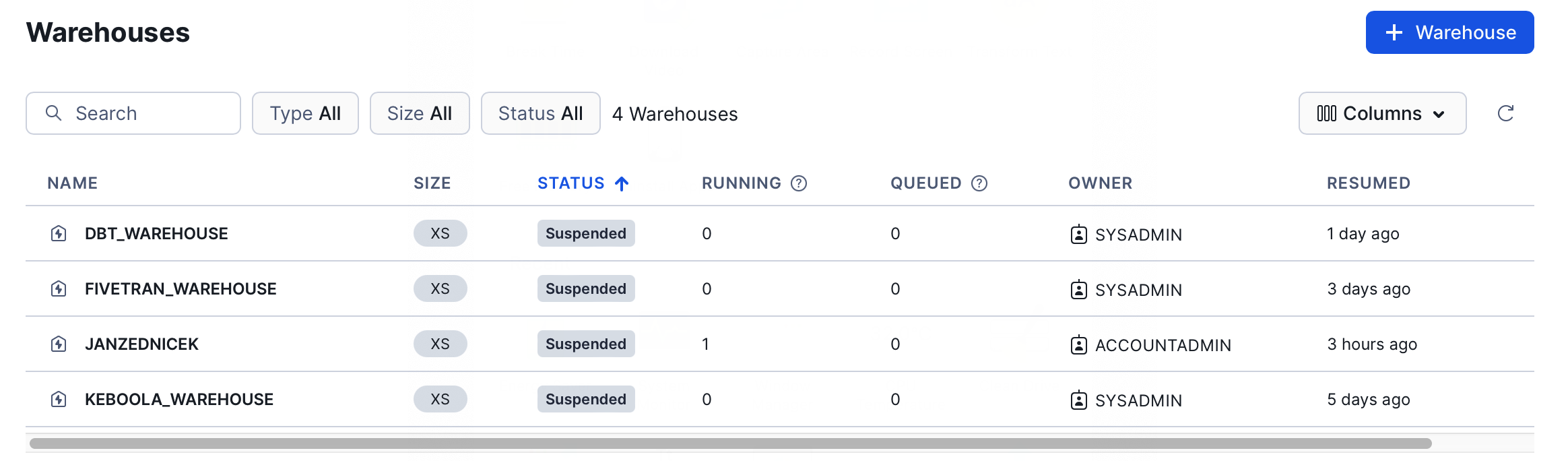

Query Processing – Separate Layer for Computing Power – Size

The second layer is the query processing (compute) layer, through which we access data. Computing power is provided by so-called virtual warehouses, which are compute instances that execute your queries. You pay for how long these warehouses run each month, and they can be set to suspend automatically when idle.
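How long a warehouse runs, and therefore what it costs, depends on its idle timeout (in Snowflake, the warehouse's `AUTO_SUSPEND` setting, in seconds). After the last query, the warehouse keeps running and billing until that timeout expires. The simulation below is a simplified model built on three assumptions. Query times are given in seconds. A query that arrives before suspension extends the current run. Each run is billed for at least 60 seconds. It is not Snowflake's exact metering logic, and `billed_seconds` is a hypothetical helper.

```python
MIN_BILLED_SECONDS = 60  # assumed minimum charge per warehouse start


def billed_seconds(queries: list[tuple[float, float]], auto_suspend: float) -> float:
    """Total billed running time for a warehouse serving `queries`.

    `queries` holds (start, end) times in seconds. The warehouse resumes at a
    query, stays up until it has been idle for `auto_suspend` seconds, and
    every separate run is billed for at least MIN_BILLED_SECONDS.
    """
    total = 0.0
    run_start = run_end = None
    for start, end in sorted(queries):
        if run_end is not None and start <= run_end:
            # The query arrives before suspension, so the current run continues.
            run_end = max(run_end, end + auto_suspend)
        else:
            if run_end is not None:
                total += max(run_end - run_start, MIN_BILLED_SECONDS)
            # The warehouse resumes for this query.
            run_start, run_end = start, end + auto_suspend
    if run_end is not None:
        total += max(run_end - run_start, MIN_BILLED_SECONDS)
    return total


if __name__ == "__main__":
    # Two hypothetical 100-second queries, 100 seconds apart.
    queries = [(0, 100), (200, 300)]
    for timeout in (60, 600):
        seconds = billed_seconds(queries, timeout)
        print(f"auto-suspend {timeout}s: {seconds:.0f}s billed, "
              f"{seconds / 3600:.3f} credits on X-Small")
```

With a 60-second timeout, the warehouse suspends between the two queries and is billed for 320 seconds. With 600 seconds, it stays up the whole time and is billed for 900 seconds.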

You can create as many virtual warehouses as you want. This lets you track costs by purpose, for example by granting each service or group of users the right to use a specific warehouse. From a cost perspective, a warehouse has 2 important parameters (besides its owner, etc.):

- Size – the size of the computing unit

- Status – whether it is running or not

You can then monitor the costs of these warehouses, and thus of individual services and users, on the Cost Management screen.
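When each team or service has its own warehouse, cost attribution comes down to grouping credits by warehouse name. The sketch assumes metering rows shaped like those in Snowflake's `SNOWFLAKE.ACCOUNT_USAGE.WAREHOUSE_METERING_HISTORY` view, using its `WAREHOUSE_NAME` and `CREDITS_USED` columns. Fetching the rows is out of scope. The warehouse names and numbers are made up, and `cost_by_warehouse` is a hypothetical helper.

```python
from collections import defaultdict


def cost_by_warehouse(rows: list[dict], price_per_credit: float) -> dict[str, float]:
    """Sum the USD cost per warehouse, most expensive first."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["WAREHOUSE_NAME"]] += row["CREDITS_USED"] * price_per_credit
    return dict(sorted(totals.items(), key=lambda item: item[1], reverse=True))


if __name__ == "__main__":
    # Hypothetical metering rows, one per warehouse and hour.
    rows = [
        {"WAREHOUSE_NAME": "ETL_WH", "CREDITS_USED": 2.0},
        {"WAREHOUSE_NAME": "BI_WH", "CREDITS_USED": 1.0},
        {"WAREHOUSE_NAME": "ANALYST_WH", "CREDITS_USED": 0.5},
        {"WAREHOUSE_NAME": "ETL_WH", "CREDITS_USED": 2.0},
    ]
    for name, usd in cost_by_warehouse(rows, price_per_credit=2.0).items():
        print(f"{name}: {usd:.2f} USD")
```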

Cloud Services – Management

The cloud services layer is the control component of the architecture. It coordinates and manages all operations and processes that take place within Snowflake, from the moment a user submits a query until the query is processed and the results are returned. This layer is also fully managed by Snowflake and provides:

- Authentication: Verifies the identities of users and ensures they have permission to access data and services.

- Infrastructure Management: Dynamically allocates and manages computing resources for processing queries and data storage.

- Metadata Management: Keeps information about data structures, schemas, and other objects, which makes data easier to organize and find.

- Query Analysis and Optimization: Improves query performance through intelligent analysis and optimization.

- Access Control: Defines and enforces rules for who can view, edit, or manage data.

Sources

- Snowflake documentation, Key Concepts & Architecture [online]. [cited 2024-02-13]. Available from WWW: https://docs.snowflake.com/en/user-guide/intro-key-concepts