Table and index compression is a functionality that has been available in various SQL Server editions for a while. It has been available in all editions, including SQL Server Express (for free), since version SQL Server 2016. Data compression allows you to save storage space significantly. This, of course, saves enterprise resources, such as hardware costs, services like backup, and more. Everything has its pros and cons, just like table and index compression.

Where Is SQL Table Compression Suitable?

When it comes to compressed objects, the downside is slightly higher CPU requirements. If you access a compressed object through queries or any DML operations, the object must first be decompressed, then the required operation is performed, and finally, the object is compressed again. For this reason, it is necessary to consider in which environment compression is suitable or not.

OLTP Systems – Not Recommended for Compression

In my opinion, this feature is highly inappropriate for OLTP (transactional) systems, where there is a high frequency of table access through inserts/updates, and operations need to be lightning-fast. Such an environment includes enterprise CRMs or web applications built on .NET, among others. In this case, the data volume is relatively small, and savings, in my opinion, are not realistic – you might save half of the storage (which, in absolute terms, might be a few gigabytes), but at the cost of slowing down the entire system. If we weigh all these factors, the negatives outweigh the positives.

An exception might be a situation where you are using the SQL Express edition for your application, which has a 10 GB storage limitation, and you are faced with a decision to either buy SQL Standard or implement table compression to slightly reduce user comfort when working. In this scenario, I would probably implement compression and test if the application can still work relatively comfortably. Savings can occur in this scenario. The cost of acquiring SQL Server Standard is approximately 100,000 CZK (depends on various factors and may be more or less), which you could save. You must estimate the impact on the application’s performance yourself.

Data Warehouses, Data Marts – Compression Recommended

On the contrary, an extremely suitable use case is for data warehouses and data marts that are computed at certain intervals (typically once a day at night) and then only queries are performed on the objects. Larger data warehouses store tens/hundreds of gigabytes of data daily, and the possibility of saving tens of percent of storage is interesting in absolute terms. Storage space on the disk/disks where SQL Server is located is only part of the benefit. Then we have, of course, backup, which will take less time, etc. The downside could be that the calculation of data for Slowly Changing Dimensions will probably take longer – insert/update operations will take longer.

Types of Table and Index Compression – Row-Level and Page-Level Compression

Basically, there are two ways to perform data compression – row-level and page-level.

Row-Level Data Compression

This brings a smaller compression effect but also consumes less CPU. It reduces the amount of metadata associated with specific records. This method affects numeric data types, dates, and binary data. Text attributes are not affected by this type of table compression. Empty values and strings of empty characters are not stored. Only a minimal number of bytes are stored for data types. If a column of type BIGINT is defined, each number in an uncompressed table occupies 8 bytes. In a compressed table, this number may be lower if the number can be stored more efficiently (e.g., as INT with 4 Bytes).

Page-Level Data Compression

Simplified compression also applies to text strings, and metadata logic is applied, where similar data or their parts are replaced by references, and only data fragments and these references are stored. This method brings greater savings but also higher CPU utilization. Page-level compression is also applied to the object where row compression is performed. Relatively complex operations are performed on records – see more in the Microsoft documentation. These operations allow much less data to be physically stored and generate savings from table compression in the database.

How to Estimate Compression Savings?

SQL Server natively includes the system procedure sp_estimate_data_compression_savings, which analyzes the object and returns an estimate of storage savings.

After implementing compression, the size of the database data file will not change. It will remain the same. However, it will increase the space that is not allocated to data. If you want to reduce the size of the data file by the space that is not allocated, you would need to perform a DBCC database operation – shrink – be careful with this, as it is a time-consuming operation, and for databases with sizes of tens of gigabytes, it may take several hours.

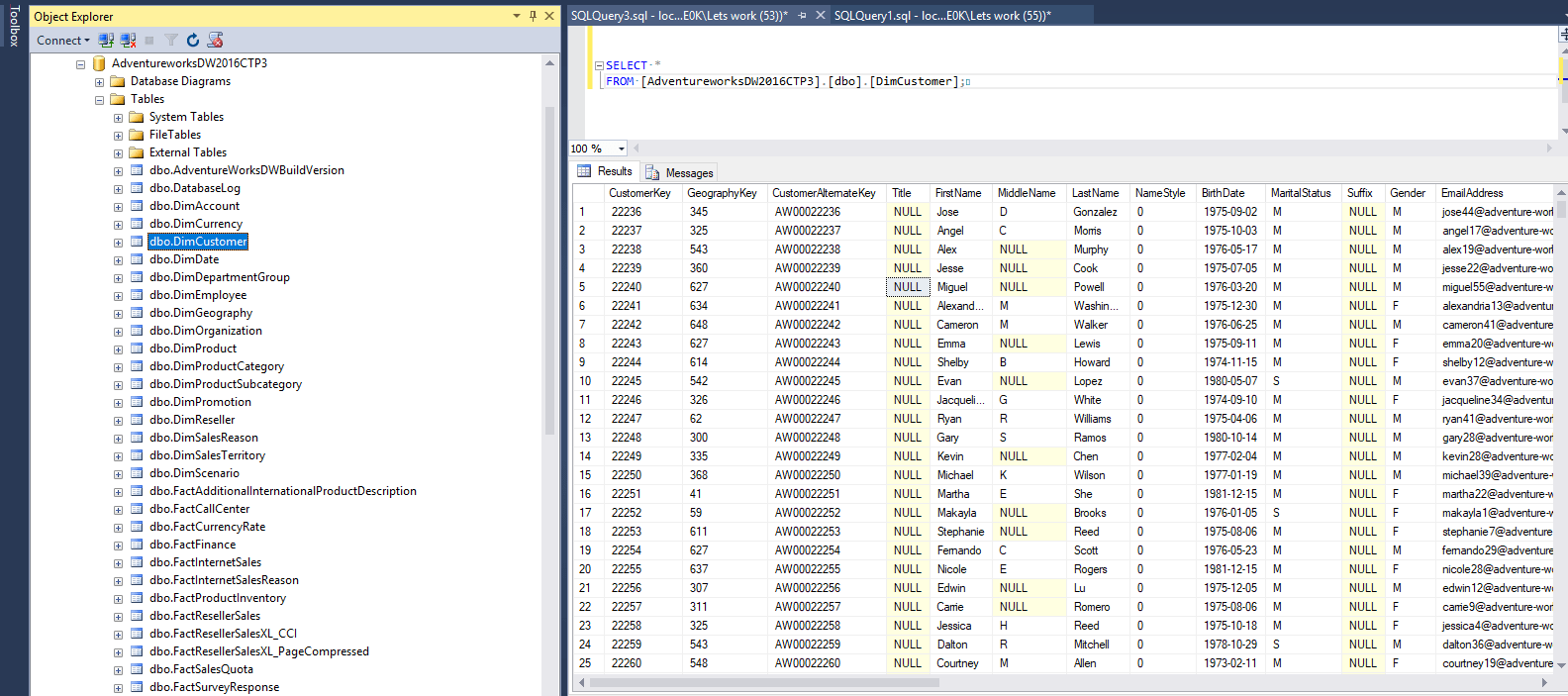

Let’s take a table with customers from the freely available Microsoft sample database (AdventureworksDW2016CTP3). The table is named DimCustomer and contains many text strings, numbers, and empty values. The table has no indexes and is stored in an unordered heap structure, which is ideal for testing.

What are the expected savings from row-level and page-level compression? You can find out by running the mentioned procedure, specifying the schema, object, and compression type. In our case, we run the procedure twice – for ROW and PAGE.

USE AdventureworksDW2016CTP3;GOEXEC sp_estimate_data_compression_savings 'dbo', 'DimCustomer', NULL, NULL, 'ROW' ;GO

USE AdventureworksDW2016CTP3;GOEXEC sp_estimate_data_compression_savings 'dbo', 'DimCustomer', NULL, NULL, 'PAGE' ;GO

Results of the Compression Savings Test

- Row-level compression (highlighted in red) – the expected storage savings are approximately 44% (7840-4408)/7840. The table contains many numbers, dates, and empty values that could be stored more efficiently.

- Page-level compression (highlighted in blue) – the expected savings in this case are almost 70% of storage. In this case, we benefit from row-level compression + more efficient storage of string strings, which are abundant in the table.

How to Compress a Table or Index?

- If you decide to implement ROW-LEVEL compression on an existing table, you can do so as follows:

ALTER TABLE [dbo].[DimCustomer]REBUILD PARTITION=ALL WITH (DATA_COMPRESSION=ROW);

- To create an empty table compressed via rows:

CREATE TABLE [Test] ([cislo] INT not null) WITH (DATA_COMPRESSION = ROW )

How to Decompress a Table?

Just as we compressed an existing table in the previous step, we can also perform decompression (DATA_COMPRESSION set to NONE).

ALTER TABLE [dbo].[DimCustomer]REBUILD PARTITION=ALL WITH (DATA_COMPRESSION=NONE);